An \(MA(q)\) process is a finite weighted sum of stationary white noise terms. Hence, an \(MA(q)\) process is stationary, with a time-invariant mean and autocovariance.

The mean and variance of \(\{x_t\}\) are easily derived. The mean must be zero, because each \(x_t\) is a sum of scaled white noise terms, each of which has mean zero.

The variance of an \(MA(q)\) process is \({ \sigma_w^2 \left( 1 + \beta_1^2 + \beta_2^2 + \beta_3^2 + \cdots + \beta_{q-1}^2 + \beta_q^2 \right) }\). This follows because the white noise terms are independent with the same variance \(\sigma_w^2\), so the variance of the sum is the sum of the variances of the scaled terms.
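The variance formula above can be checked numerically. The following is a minimal sketch, not a definitive implementation: it assumes the model has the form \(x_t = w_t + \beta_1 w_{t-1} + \cdots + \beta_q w_{t-q}\) with Gaussian white noise, and the function names `ma_variance` and `simulate_ma`, the coefficient values, and the seed are all hypothetical choices made for illustration.

```python
import random


def ma_variance(betas, sigma_w=1.0):
    """Theoretical variance sigma_w^2 * (1 + beta_1^2 + ... + beta_q^2)."""
    return sigma_w ** 2 * (1.0 + sum(b * b for b in betas))


def simulate_ma(betas, n, sigma_w=1.0, seed=0):
    """Simulate n values of x_t = w_t + beta_1 w_{t-1} + ... + beta_q w_{t-q}.

    Assumes Gaussian white noise with mean 0 and standard deviation sigma_w.
    """
    rng = random.Random(seed)
    q = len(betas)
    # Draw q extra noise terms so that every x_t has a full set of lags.
    w = [rng.gauss(0.0, sigma_w) for _ in range(n + q)]
    coeffs = [1.0] + list(betas)  # beta_0 = 1
    return [
        sum(c * w[t - i] for i, c in enumerate(coeffs))
        for t in range(q, n + q)
    ]


betas = [0.7, 0.5, 0.2]  # illustrative MA(3) coefficients
x = simulate_ma(betas, n=100_000)

sample_mean = sum(x) / len(x)
sample_var = sum((v - sample_mean) ** 2 for v in x) / len(x)
theoretical_var = ma_variance(betas)

print("sample mean:         ", round(sample_mean, 3))
print("sample variance:     ", round(sample_var, 3))
print("theoretical variance:", round(theoretical_var, 3))
```

With a long simulated series, the sample mean should be close to zero and the sample variance close to the theoretical value, up to sampling error.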

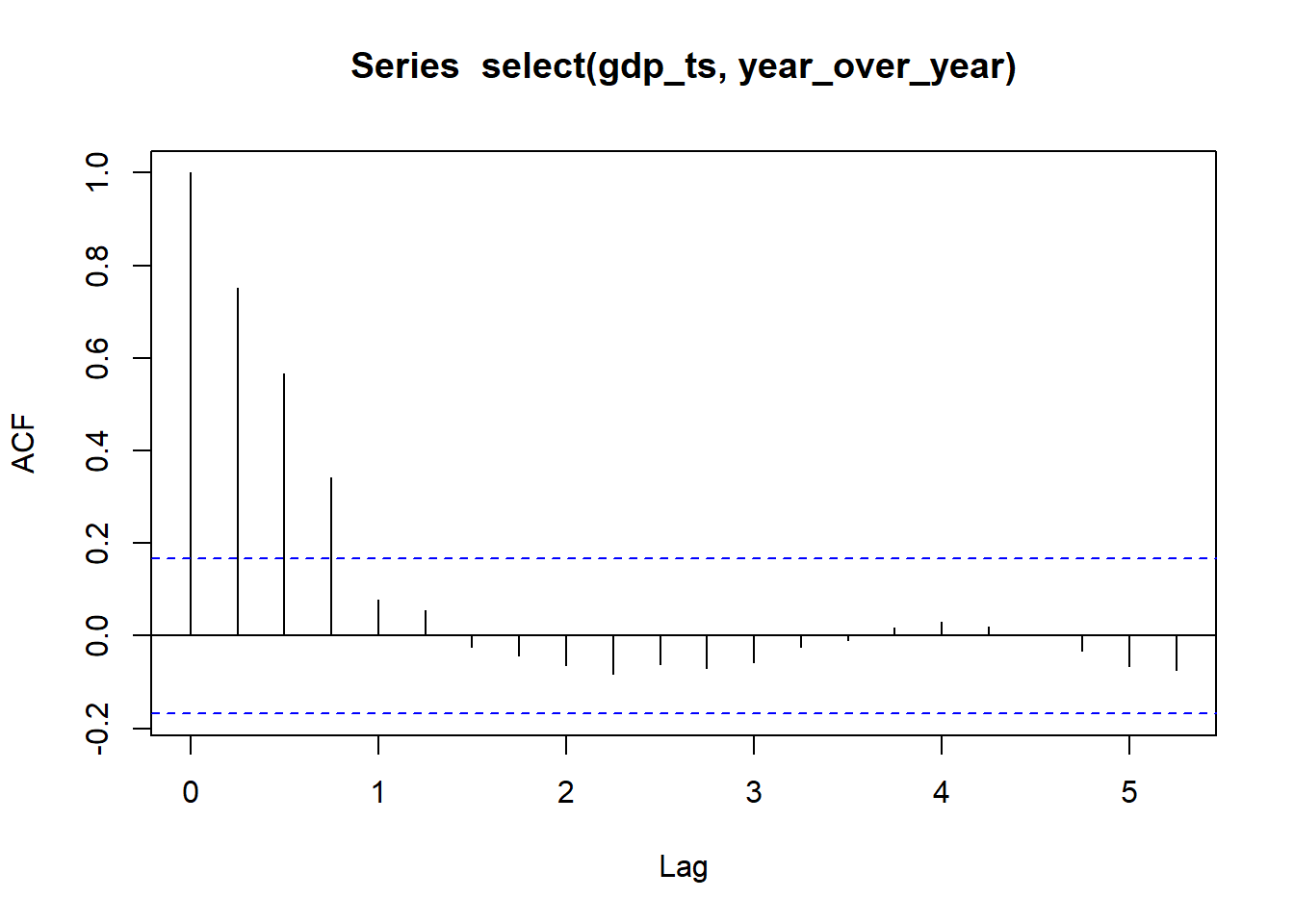

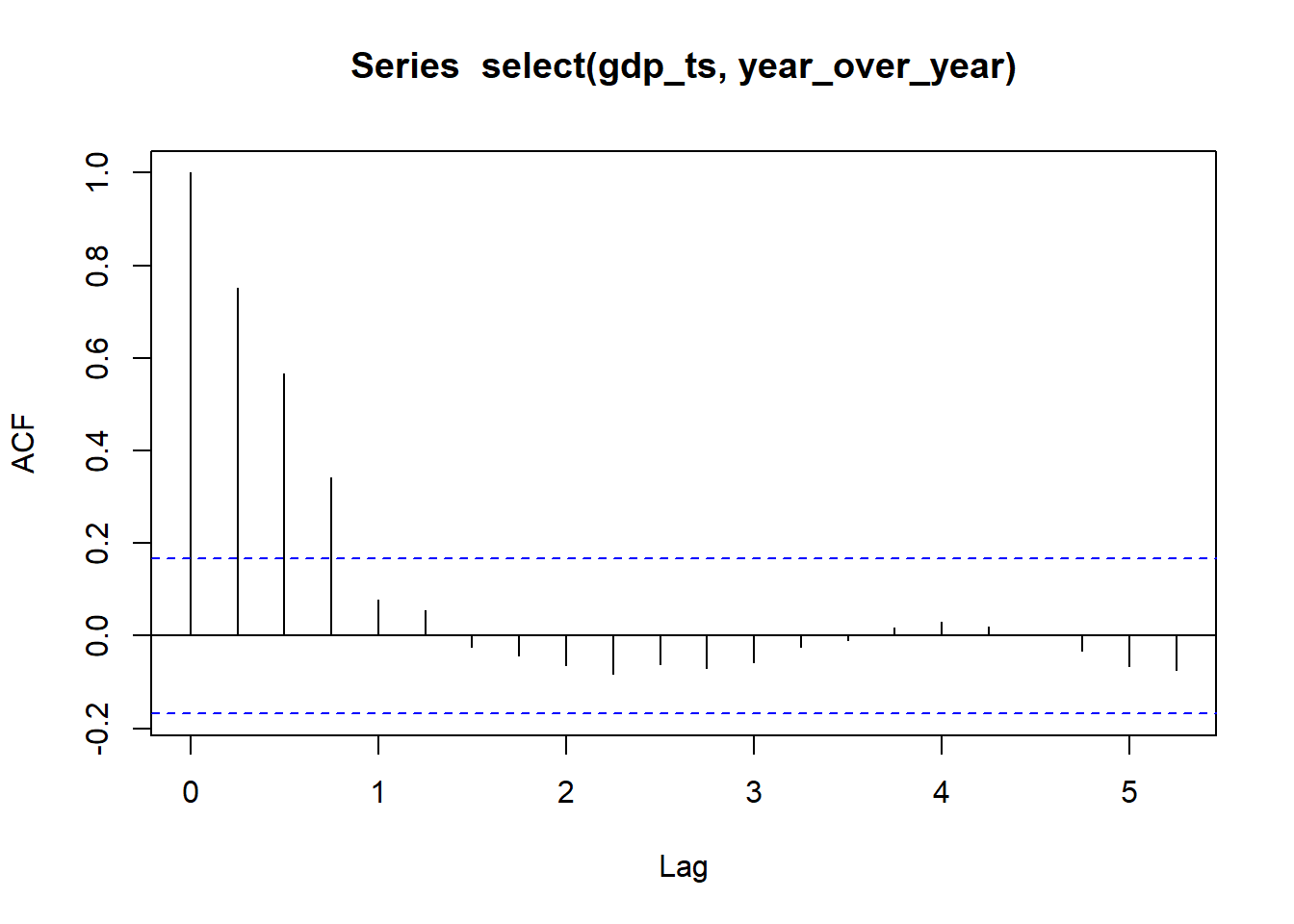

So, the autocorrelation function is

\[
\rho(k) = \operatorname{cor}(x_t, x_{t+k}) =
\begin{cases}
1, & k=0 \\
~\\
\dfrac{ \sum\limits_{i=0}^{q-k} \beta_i \beta_{i+k} }{ \sum\limits_{i=0}^q \beta_i^2 }, & k = 1, 2, \ldots, q \\
~\\
0, & k > q
\end{cases}
\] where \(\beta_0 = 1\).

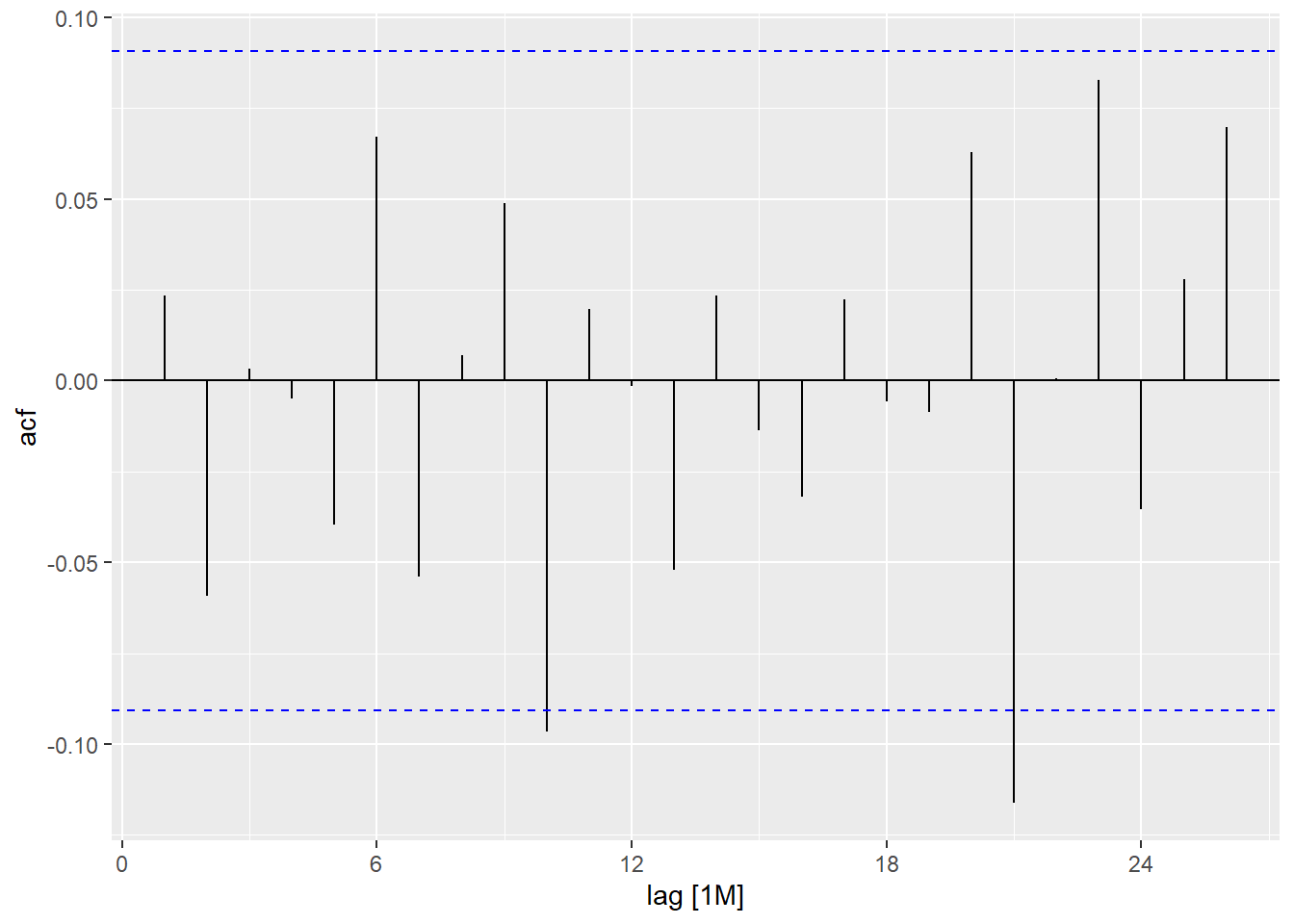

Note that the autocorrelation function is zero if \(k>q\), because \(x_t\) and \(x_{t+k}\) are then weighted sums of disjoint sets of white noise terms. The two sums are therefore independent, and the covariance between them is zero.
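The piecewise autocorrelation function above translates directly into a few lines of code. This is a sketch under the same convention \(\beta_0 = 1\); the function name `ma_acf` and the example coefficients are hypothetical, chosen only to illustrate the cutoff after lag \(q\).

```python
def ma_acf(betas, max_lag):
    """Theoretical autocorrelations rho(0), ..., rho(max_lag) of an MA(q) process.

    betas holds beta_1, ..., beta_q; beta_0 = 1 is prepended internally.
    """
    b = [1.0] + list(betas)
    q = len(betas)
    denom = sum(c * c for c in b)  # sum of beta_i^2 for i = 0..q
    acf = []
    for k in range(max_lag + 1):
        if k > q:
            # No shared white noise terms beyond lag q.
            acf.append(0.0)
        else:
            numer = sum(b[i] * b[i + k] for i in range(q - k + 1))
            acf.append(numer / denom)
    return acf


# MA(1) with beta_1 = 0.5: rho(1) = 0.5 / (1 + 0.25) = 0.4
print(ma_acf([0.5], max_lag=2))  # [1.0, 0.4, 0.0]

# MA(3): nonzero up to lag 3, exactly zero afterwards
print([round(r, 4) for r in ma_acf([0.7, 0.5, 0.2], max_lag=5)])
```

For the MA(3) example, the autocorrelations at lags 4 and 5 are exactly zero, matching the \(k > q\) case.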