Lesson 8: Review for Exam 1

Unit 1 introduced you to some foundational ideas in statistical theory. A firm grasp of these concepts will allow you to connect deeply with the statistical tools that you will be presented with in Units 2, 3, and 4. Use this page to review the Lesson Outcomes and Summaries in preparation for the Unit 1 Exam. If there are any lesson outcomes that you are not confident with, return to that lesson and review those outcomes in detail.

Lesson 1

Outcomes

- Explain the course policies

- Access course resources (course outline, lesson schedule, preparation activities, reading quizzes, homework assignments, assessments, etc.)

- Communicate with the instructor and group members

- Access statistical analysis software tools for class quizzes, assignments, and exams

- Apply principles of the gospel of Jesus Christ in this class

- Apply the three rules of probability for different probability scenarios

Summary

- In this class you will use the online textbook that has been written for you by your statistics teachers. All of the assignments and quizzes, available in I-Learn, will be based on the readings, so study it well. Most weeks will cover two lessons.

- You have successfully located the online textbook. Ensure you have also located the course in I-Learn and can access the quizzes and assignments that are there.

- Ensure you have located the contact information for your instructor in the I-Learn course. Recording the contact information of peers from class would also be a wise idea.

- This course uses MS Excel for all statistical analysis. Check that you have access to the software on your computer. If not, see I-Learn for details on how to obtain it through the University for free.

- By doing the work, staying on schedule, and living the Honor Code you will succeed in this class.

- The three rules of probability are:

- A probability is a number between 0 and 1. \[0 \leq P(X) \leq 1\]

- If you list all the outcomes of a probability experiment (such as rolling a die) the probability that one of these outcomes will occur is 1. In other words, the sum of the probabilities in any probability is 1. \[\sum P(X) = 1\]

- The probability that an outcome will not occur is 1 minus the probability that it will occur. \[P(\text{not}~X) = 1 - P(X)\]

Lesson 2

Outcomes

- Describe the five steps of the Statistical Process

- Distinguish between an observational study and an experiment

- Differentiate between a population and a sample

- Describe each of the following sampling schemes:

- Simple random sampling

- Stratified sampling

- Systematic sampling

- Cluster sampling

- Convenience sampling

- Explain the importance of using random sampling

- Distinguish between a quantitative and a categorical variable

Summary

The Statistical Process has five steps: Design the study, Collect the data, Describe the data, Make inference, Take action. These can be remembered by the pneumonic “Daniel Can Discern More Truth.”

In a designed experiment, researchers control the conditions of the study, typically with a treatment group and a control group, and then observe how the treatments impact the subjects. In a purely observational study, researchers don’t control the conditions but only observe what happens.

The population is the entire group of all possible subjects that could be included in the study. The sample is the subset of the population that is actually selected to participate in the study. Statistics use information from the sample to make claims about what is true about the entire population.

There are many sampling methods used to obtain a sample from a population. The best methods use some sort of randomness (like pulling names out of a hat, rolling dice, flipping coins, or using a computer generated list of random numbers) to avoid bias.

- A simple random sample (SRS) is a random sample taken from the full list of the population. This is the least biased (best) sampling method, but can only be implemented when a full list of the population is accessible.

- A stratified sample divides the population into similar groups and then takes an SRS from each group. The main reason to use this sampling method is when a study wants to compare and contrast certain groups within the population, say to compare freshman, sophomores, juniors, and seniors at a university.

- A systematic sample samples every kth item in the population, beginning at a random starting point. This is best applied when subjects are lined up in some way, like at a fast food restaurant, an airport security line, or an assembly line in a factory.

- A cluster sample consists of taking all items in one or more randomly selected clusters, or blocks. For example, ecologists could draw grids on a map of a forest to create small sampling regions and then sample all trees they find in a few randomly selected regions. Note that this differs from a stratified sample in that only a few sub-groups (clusters) are selected and that all subjects within the selected clusters are included in the study.

- A convenience sample involves selecting items that are relatively easy to obtain and does not use random selection to choose the sample. This method of sampling can be assumed to always bring bias into the sample.

The best way to avoid bias when trying to make conclusions about a population from a single sample of that population is to use a random sampling method to obtain the sample.

Quantitative variables represent things that are numeric in nature, such as the value of a car or the number of students in a classroom. Categorical variables represent non-numerical data that can only be considered as labels, such as colors or brands of shoes.

Lesson 3

Outcomes

- Create a histogram from data

- Interpret data presented in a histogram

- Identify left-skewed, right-skewed, and symmetric distributions from histograms

- Calculate the mean, median, and mode for quantitative data using software

- Compare the centers of distributions using graphical and numerical summaries

- Describe the effects that skewness or outliers have on the relationship between the mean and median

- Distinguish between a parameter and a statistic

Summary

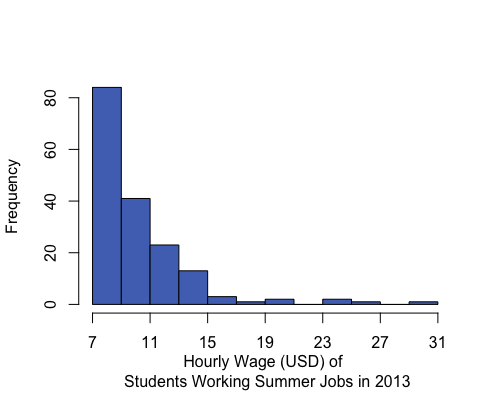

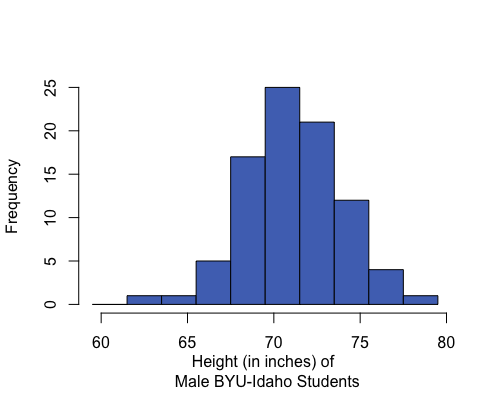

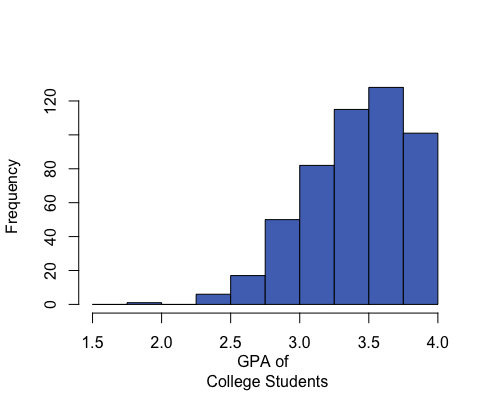

Histograms are created by dividing the number line into several equal parts, starting at or below the minimum value occurring in the data and ending at or above the maximum value in the data. The number of data points occurring in each interval (called a bin) are counted. A bar is then drawn for each bin so that the height of the bar shows the number of data points contained in that bin.

A histogram allows us to visually interpret data to quickly recognize which values are most common and which values are least common in the data.

Histograms can be left-skewed (the majority of the data is on the right of the histogram, less common values stretch to the left side), right-skewed (majority of the data is on the left side with less common values stretching to the right), or symmetrical and bell-shaped (most data is in the middle with less common values stretching out to either side).

The mean, median, and mode are measures of the center of a distribution. The mean is the most common measure of center and is computed by adding up the observed data and dividing by the number of observations in the data set. The median represents the 50th percentile in the data. The mean can be calculated in Excel using

=AVERAGE(...), the median by using=MEDIAN(...), and the mode by=MODE(...)where the...in each case consists of the cell references that highlight the data.When comparing the centers of distributions using graphical and numerical summaries, the direction of the skew showing in the histogram will generally correspond with the mean being pulled in that direction.

| Right-skewed | Symmetric & Bell-shaped | Left-skewed |

|---|---|---|

|

|

|

|

Mean: $10.45 Median: $9.04 Mean is to the right of the median. |

Mean: 71.1 inches Median: 71 inches Mean and median are roughly equal. |

Mean: 3.42 Median: 3.45 Mean is to the left of the median. |

In a symmetrical and bell-shaped distribution of data, the mean, median, and mode are all roughly the same in value. However, in a skewed distribution, the mean is strongly influenced by outliers and tends to be pulled in the direction of the skew. In a left-skewed distribution, the mean will tend to be to the left of the median. In a right-skewed distribution, the mean will tend to be to the right of the median.

A parameter is a true (but usually unknown) number that describes a population. A statistic is an estimate of a parameter obtained from a sample of the population.

Lesson 4

Outcomes

- Calculate a percentile from data

- Interpret a percentile

- Calculate the standard deviation from data

- Interpret the standard deviation

- Calculate the five-number summary using software

- Interpret the five-number summary

- Create a box plot using software

- Determine the five-number summary visually from a box plot

Summary

A percentile is calculated in Excel using

=PERCENTILE(..., 0.#)where the0.#is the percentile written as a decimal number. So the 20th percentile would be written as 0.2.A percentile is a number such that a specified percentage of the data are at or below this number. For example, if say 80% of college students were shorter than (or equal to) 70 inches tall in height, then the 80th percentile of heights of college students would be 70 inches.

Standard deviation is calculated in Excel for a sample of data using

=STDEV.S(...).The standard deviation is a number that describes how spread out the data typically are from the mean of that data. A larger standard deviation means the data are more spread out from their mean than data with a smaller standard deviation. The standard deviation is never negative. A standard deviation of zero implies all values in the data set are exactly the same.

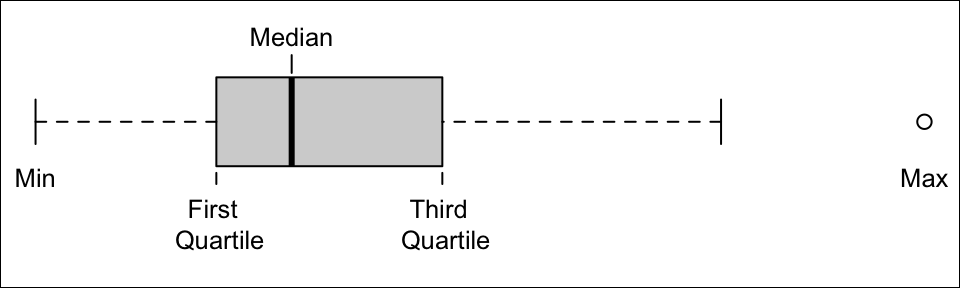

To compute any of the five-number summary values in Excel, use the Excel function

=QUARTILE.INC(..., #)where#is either a 0 (gives the minimum), 1 (gives the first quartile), 2 (gives the second quartile, i.e., median), 3 (gives the third quartile), or 4 (gives the maximum).The five-number summary consists of (1) the minimum value in the data, (2) the first quartile (25th percentile) of the data, (3) the median of the data (50th percentile), (4) the third quartile (75th percentile) of the data, and (5) the maximum value occurring in the data.

To create a boxplot in Excel, highlight the data, go to Insert on the menu ribbon, choose the histogram icon, select the Boxplot option from the menu that appears.

Boxplots are a visualization of the five-number summary of a data set.

Lesson 5

Outcomes

- State the properties of a normal distribution

- Calculate the z-score for an individual observation, given the population mean and standard deviation

- Interpret a z-score

- Use the normal distribution to calculate probabilities for one observation

- Approximate probabilities from a normal distribution using the 68-95-99.7 rule

- Calculate a percentile using the normal distribution

Summary

A normal density curve is symmetric and bell-shaped with a mean of \(\mu\) and a standard deviation of \(\sigma\). The curve lies above the horizontal axis and the total area under the curve is equal to 1. A standard normal distribution has a mean of 0 and a standard deviation of 1.

A z-score is calculated as: \(\displaystyle{z = \frac{\text{value}-\text{mean}}{\text{standard deviation}} = \frac{x-\mu}{\sigma}}\)

A z-score tells us how many standard deviations above (\(+Z\)) or below (\(-Z\)) the mean (\(\mu\)) a given value (\(x\)) is.

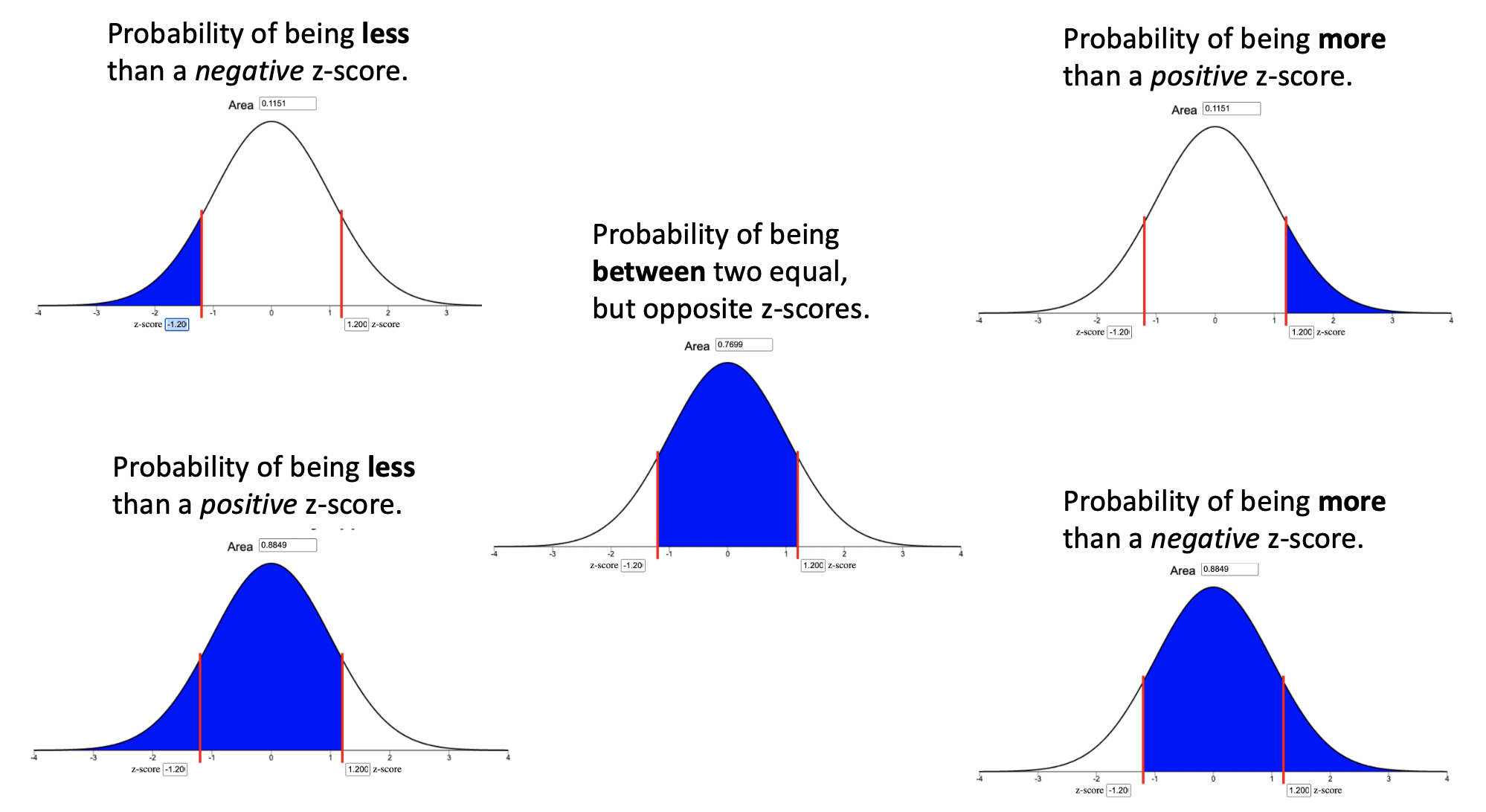

To calculate probabilities for an observation \(x\), calculate the \(z\)-score using \(\mu\), \(\sigma\), and \(x\) and then use the Normal Probability Applet to shade the appropriate area of the distribution for the desired probability. The area shaded depends on both the direction of interest (above, below, between) and the sign of the z-score as depicted in the images below. In every case, the probability is given by the Area box at the top of the applet.

The 68-95-99.7% rule states that when data are normally distributed, approximately 68% of the data lie within \(z=1\) standard deviation (\(\sigma\)) from the mean, approximately 95% of the data lie within \(z=2\) standard deviations from the mean, and approximately 99.7% of the data lie within \(z=3\) standard deviations from the mean. For example, this rule approximates that 2.5% of observations will be less than a z-score of \(z=-2\).

Percentiles can be calculated using the Normal Probability Applet by (1) shading the left tail only, (2) entering the desired percentile in the “Area” box, and (3) using the z-score from where the blue shaded region ends solve for \(x\) in the equation \(z=\frac{x-\mu}{\sigma}\).

Lesson 6

Outcomes

- Describe the concept of a sampling distribution of the sample mean

- State the Central Limit Theorem

- Determine the mean of the sampling distribution of the sample mean for a given parent population

- Determine the standard deviation of the sampling distribution of the sample mean for a given parent population

- Determine the shape of the sampling distribution of the sample mean for a given parent population

- State the Law of Large Numbers

Summary

The distribution of sample means is a distribution of all possible sample means (\(\bar x\)) for a particular sample size.

The Central Limit Theorem states that the sampling distribution of the sample mean will be approximately normal if the sample size \(n\) of a sample is sufficiently large. In this class, \(n\ge 30\) is considered to be sufficiently large.

The mean of the distribution of sample means is the mean \(\mu\) of the population: \(\mu_{\bar{x}} = \mu\).

The standard deviation of the distribution of sample means is the standard deviation \(\sigma\) of the population divided by the square root of \(n\): \(\sigma_{\bar{x}} = \sigma/\sqrt{n}\).

The distribution of sample means is normal in either of two situations: (1) when the data is normally distributed or (2) when, thanks to the Central Limit Theorem (CLT), our sample size (\(n\)) is large.

The Law of Large Numbers states that as the sample size (\(n\)) gets larger, the sample mean (\(\bar x\)) will get closer to the population mean (\(\mu\)). This can be seen in the equation for \(\sigma_{\bar{x}} = \sigma/\sqrt{n}\). Notice as \(n\) increases, then \(\sigma_\bar{x}\) will get smaller.

Lesson 7

Outcomes

- Calculate the z-score for a sample mean, given the population mean and standard deviation

- Use the normal distribution to calculate probabilities for a sample mean

Summary

A z-score for a sample mean is calculated as: \(\displaystyle{z = \frac{\text{value}-\text{mean}}{\text{standard deviation}} = \frac{\bar x-\mu}{\sigma/\sqrt{n}}}\)

When the distribution of sample means is normally distributed, we can use a z-score and the Normal Probability Applet to calculate the probability that a sample mean is above, below, between, or more extrem than some given value (or values).

Copyright © 2020 Brigham Young University-Idaho. All rights reserved.